(I had an error submitting this post. If it shows up twice, sorry, please delete the duplicate. Thanks!)

Hello,

I've been running OMV on a Raspberry Pi 3 for just a couple of weeks. The whole system is a small NAS for home backups of important data: the Pi runs only the OMV image (nothing else installed), with two identical external 4 TB HDDs set up as a RAID mirror.

For various reasons I had to move the whole thing to another location in my apartment.

Before moving it, I shut it down properly from the web admin page, then unplugged the drives (noting each drive's ID, which USB port it was in, etc.).

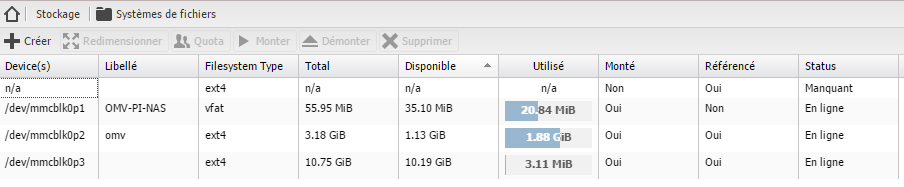

After plugging everything back in at the new location, it started just fine. The only problem is that the RAID list is empty, and the file systems list shows something a bit scary for the safety of my data:

Obviously I need to recover the "n/a" device, which is the RAID array made of my two HDDs. Both drives are still correctly listed in the physical disks list.

So, referring to your list of the information needed:

> If you have a degraded or missing RAID array, please post the following info (in code boxes) with your question.
>
> Log in as root locally or via SSH (Windows users can use PuTTY):
>
> - `cat /proc/mdstat`
> - `blkid`
> - `fdisk -l | grep "Disk "`
> - `cat /etc/mdadm/mdadm.conf`
> - `mdadm --detail --scan --verbose`
> - Post the type of drives and the quantity being used as well.
> - Post what happened for the array to stop working. Reboot? Power loss?
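For convenience, the commands in that list can be run in one go and saved to a single file that is easy to paste into one code box. This is a minimal POSIX `sh` sketch; the function name `collect_raid_info` and the default output path (`$TMPDIR` or `/tmp`, file `raid-info.txt`) are my own arbitrary choices, not something OMV or mdadm provides:

```shell
#!/bin/sh
# Hypothetical helper: run the diagnostic commands requested above and
# append each command plus its output to one text file. Run as root.
collect_raid_info() {
    out="${1:-${TMPDIR:-/tmp}/raid-info.txt}"
    : > "$out"   # start with an empty file
    for cmd in \
        "cat /proc/mdstat" \
        "blkid" \
        "fdisk -l | grep 'Disk '" \
        "cat /etc/mdadm/mdadm.conf" \
        "mdadm --detail --scan --verbose"
    do
        # Header line naming the command, then its stdout and stderr
        printf '# %s\n' "$cmd" >> "$out"
        sh -c "$cmd" >> "$out" 2>&1 || printf '(command failed)\n' >> "$out"
        printf '\n' >> "$out"
    done
    printf '%s\n' "$out"   # print where the report was written
}

collect_raid_info "$@"
```

Usage, as root: `sh collect-raid-info.sh /root/raid-info.txt`, then paste the file's contents. Note that `mdadm --detail --scan --verbose` prints nothing at all when no array is assembled, so an empty section for it is expected in a case like this one.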

Here are the results on my system:

```
root@pi-nas:~# cat /proc/mdstat
Personalities :
unused devices: <none>
root@pi-nas:~# blkid
/dev/mmcblk0p1: SEC_TYPE="msdos" LABEL="OMV-PI-NAS" UUID="7D5C-A285" TYPE="vfat" PARTUUID="000b5098-01"
/dev/mmcblk0p2: LABEL="omv" UUID="5d18be51-3217-4679-9c72-a54e0fc53d6b" TYPE="ext4" PARTUUID="000b5098-02"
/dev/mmcblk0p3: UUID="fa36508a-b3c4-4499-b30a-711dd5994225" TYPE="ext4" PARTUUID="000b5098-03"
/dev/sdb: UUID="03adbb27-4386-5f14-c783-c3d1a48d1ee4" UUID_SUB="55ed7cc3-06d6-e5df-c3ef-89483d8c23a9" LABEL="pi-nas:nas" TYPE="linux_raid_member"
/dev/sda: UUID="03adbb27-4386-5f14-c783-c3d1a48d1ee4" UUID_SUB="74e6c156-7b20-666b-f6f1-bc25ab05f6a9" LABEL="pi-nas:nas" TYPE="linux_raid_member"
/dev/mmcblk0: PTUUID="000b5098" PTTYPE="dos"
root@pi-nas:~# fdisk -l | grep "Disk "
Disk /dev/ram0: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram1: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram2: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram3: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram4: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram5: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram6: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram7: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram8: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram9: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram10: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram11: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram12: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram13: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram14: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/ram15: 4 MiB, 4194304 bytes, 8192 sectors
Disk /dev/mmcblk0: 14.5 GiB, 15523119104 bytes, 30318592 sectors
Disk identifier: 0x000b5098
Disk /dev/sdb: 3.7 TiB, 4000787025920 bytes, 976754645 sectors
Disk /dev/sda: 3.7 TiB, 4000787025920 bytes, 976754645 sectors
root@pi-nas:~# cat /etc/mdadm/mdadm.conf
# mdadm.conf
#
# Please refer to mdadm.conf(5) for information about this file.
#
# by default, scan all partitions (/proc/partitions) for MD superblocks.
# alternatively, specify devices to scan, using wildcards if desired.
# Note, if no DEVICE line is present, then "DEVICE partitions" is assumed.
# To avoid the auto-assembly of RAID devices a pattern that CAN'T match is
# used if no RAID devices are configured.
DEVICE partitions
# auto-create devices with Debian standard permissions
CREATE owner=root group=disk mode=0660 auto=yes
# automatically tag new arrays as belonging to the local system
HOMEHOST <system>
# definitions of existing MD arrays
ARRAY /dev/md0 metadata=1.2 name=pi-nas:nas UUID=03adbb27:43865f14:c783c3d1:a48d1ee4
# instruct the monitoring daemon where to send mail alerts
MAILADDR admin@sebastienbourgine.com...
MAILFROM
root@pi-nas:~# mdadm --detail --scan --verbose
root@pi-nas:~#
```
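For what it's worth, `blkid` and `mdadm.conf` print the array UUID in different formats: `blkid` uses dash-separated 8-4-4-4-12 groups, while the `ARRAY` line uses four 8-digit groups separated by colons. A small POSIX `sh` sketch to compare them after stripping the separators; `normalize_uuid` and `same_array_uuid` are hypothetical helper names, and the two values are copied from the output above:

```shell
#!/bin/sh
# Hypothetical helper: drop ':' and '-' separators and lowercase the hex,
# so that blkid-style and mdadm.conf-style UUIDs can be compared directly.
normalize_uuid() {
    printf '%s\n' "$1" | tr -d ':-' | tr 'A-F' 'a-f'
}

# Hypothetical helper: exit status 0 if both strings denote the same UUID
same_array_uuid() {
    [ "$(normalize_uuid "$1")" = "$(normalize_uuid "$2")" ]
}

# Values copied from the blkid output and the ARRAY line in mdadm.conf
blkid_uuid="03adbb27-4386-5f14-c783-c3d1a48d1ee4"
conf_uuid="03adbb27:43865f14:c783c3d1:a48d1ee4"

if same_array_uuid "$blkid_uuid" "$conf_uuid"; then
    echo "match"
else
    echo "mismatch"
fi
```

With these values it prints `match`. Together with both disks reporting `TYPE="linux_raid_member"` and `LABEL="pi-nas:nas"`, this suggests the two drives still carry the metadata of the array defined in `mdadm.conf`, even though `/proc/mdstat` shows it is not assembled.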

I sincerely hope you'll have some idea of how to help me sort this out...

Thanks in advance!