Hello,

Is there a default buffer option in OMV? Or is this normal with RAID1?

My problem:

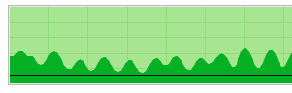

When I try to copy a file to the NAS via SMB, the transfer speed looks like this:

It keeps swinging between ~160 MB/s and ~30 MB/s the whole time.

Thank you for your time.

Hello,

is there any default buffer option with OMV? Or is it normal with RAID1?

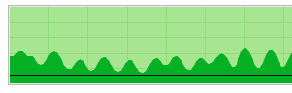

My problem:

When I'm trying to copy some file to NAS via SMB it looks like this:

~160MB/s - ~30MB/s all the time.

Thank you for your time.

Up-s

I'm OK, but how is that link supposed to help me?

I wanted to enable some cache for HDD writes, and this link seems to be about the opposite, doesn't it?

In OMV, go to Storage | Disks, select a disk and edit it. Enable write cache.

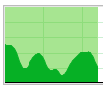

Didn't help.

Same issue here with a Pi 4.

Up-s

By default ALL free memory in Linux is used for disk caches.

However, write caches are intentionally kept small to limit the damage from a power failure: anything still in the dirty write cache at that moment is lost. So data is written out as soon as possible, and the dirty write cache pages then become clean read cache pages.
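If you want to see how much data is sitting in the dirty write cache at a given moment, the kernel reports it in `/proc/meminfo`. Here is a minimal Python sketch that picks out the relevant fields. The function names `parse_meminfo` and `dirty_summary` are just made up for this example, and I'm assuming the usual `Name:   value kB` layout of that file.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo style text into {field_name: integer_value}.

    Most fields are reported in kB; a few (such as the HugePages
    counters) are plain counts.
    """
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Name: value" line
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def dirty_summary(text):
    """Return only the fields that matter for the write cache discussion."""
    info = parse_meminfo(text)
    keys = ("Dirty", "Writeback", "Cached", "MemFree")
    return {key: info.get(key) for key in keys}


# Example use on a Linux box:
# with open("/proc/meminfo") as f:
#     for key, kb in dirty_summary(f.read()).items():
#         print(f"{key}: {kb} kB")
```

`Dirty` is data waiting to be written, `Writeback` is data being written right now, and `Cached` is the page cache overall. Watching `Dirty` during a large SMB copy should show whether the write cache fills up and drains in the same rhythm as the speed swings.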

It is possible to relax the pressure to write dirty cache pages.

However, this is also easy to get wrong, leaving the server slower than when you started. It is a good idea to measure before and after you change anything. And before you start delaying writes, you really should get a UPS.
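For the "measure before and after" part, a rough local write test can be done with a few lines of Python. This is only a sketch: the name `measure_write_mb_per_s` and the default sizes are my own choices, and it measures local disk writes on the NAS itself, not the SMB path. It calls `fsync` so that the time includes getting the data onto the disk, not just into the page cache.

```python
import os
import time


def measure_write_mb_per_s(path, total_mb=256, chunk_mb=4):
    """Write about total_mb of random data to path and return MB/s.

    The file is flushed and fsync'ed before the clock stops, then removed.
    """
    chunk = os.urandom(chunk_mb * 1024 * 1024)
    chunks = max(1, total_mb // chunk_mb)
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(chunks):
            f.write(chunk)
        f.flush()
        os.fsync(f.fileno())  # wait until the data has reached the disk
    elapsed = time.perf_counter() - start
    os.remove(path)
    return (chunks * chunk_mb) / elapsed


# Example use (the path is hypothetical, point it at the filesystem under test):
# print(f"{measure_write_mb_per_s('/srv/testdisk/throughput.bin'):.1f} MB/s")
```

Run it a few times before and after a change and compare the numbers. Use a total size well above your RAM if you want to see sustained speed rather than cache effects.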

Here is one article where this is discussed:

https://lonesysadmin.net/2013/…rformance-vm-dirty_ratio/

If you google stuff like "vm.dirty_ratio linux" you will find more.
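Before changing anything it helps to write down the current values. On Linux the settings live as files under `/proc/sys/vm`. A small sketch for reading them follows. The helper name `read_dirty_settings` is made up here, and the `base` parameter is only there so the function can be pointed at another directory for testing.

```python
import os

# Writeback-related sysctls found under /proc/sys/vm on Linux.
DIRTY_KEYS = (
    "dirty_ratio",
    "dirty_background_ratio",
    "dirty_expire_centisecs",
    "dirty_writeback_centisecs",
)


def read_dirty_settings(base="/proc/sys/vm"):
    """Return {setting_name: int value, or None if it cannot be read}."""
    settings = {}
    for key in DIRTY_KEYS:
        try:
            with open(os.path.join(base, key)) as f:
                settings[key] = int(f.read().strip())
        except (OSError, ValueError):
            settings[key] = None  # missing file or unexpected content
    return settings


# Example use on a Linux box:
# for name, value in read_dirty_settings().items():
#     print(f"vm.{name} = {value}")
```

This only reads the values. Keep the output around so you can go back to the original settings if a change makes things worse.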

This may help because data in the cache can be reordered and combined into larger sequential writes. And large sequential writes are much faster than small random writes. However, RAID may work against that, depending on how it is set up. A large write cache can even make it possible to discard all but the last of several writes to the same location.
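To make the reordering and combining idea concrete, here is a toy model in Python. It is not how the kernel actually does it, just an illustration: writes arrive as `(block_number, data)` pairs, later writes to the same block replace earlier ones, and neighbouring blocks are merged into sequential runs. The name `coalesce_writes` is invented for this sketch.

```python
def coalesce_writes(writes):
    """Toy model of a write cache.

    writes: list of (block_number, data) in arrival order.
    Returns a list of (start_block, [data, ...]) runs sorted by block,
    where each run covers consecutive blocks.
    """
    latest = {}
    for block, data in writes:
        latest[block] = data  # a later write to the same block wins

    runs = []
    for block in sorted(latest):
        if runs and runs[-1][0] + len(runs[-1][1]) == block:
            runs[-1][1].append(latest[block])  # extends the current run
        else:
            runs.append((block, [latest[block]]))  # starts a new run
    return runs


# Five small writes in random order, two of them to block 7:
# coalesce_writes([(7, "a"), (3, "b"), (4, "c"), (7, "d"), (5, "e")])
# gives two sequential runs: blocks 3-5, and block 7 with only "d".
```

The longer data is allowed to stay in the cache, the more chances there are for this kind of merging, which is the trade-off against losing that data on power failure.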

Normally you should have no need to mess with vm.dirty_ratio and so on. If you think you need to, something else may be seriously wrong, like SMR drives in a RAID configuration. Or the cause may be something completely unrelated, like the router, a network cable or a broken HDD.

Do some testing first, with a single non-SMR drive, formatted as EXT4, instead of the RAID array. Then you may be able to close in on what really is your problem.

Sorry for my late answer.

Thank you for all that info. I thought it would be something easy to set up.
