Hi everyone, and please excuse my poor English; I will try my best.

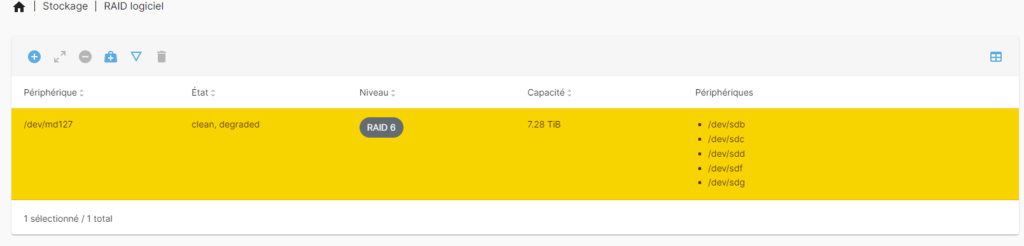

I lost my RAID6 array in OMV. I don't know how or when it happened, sorry.

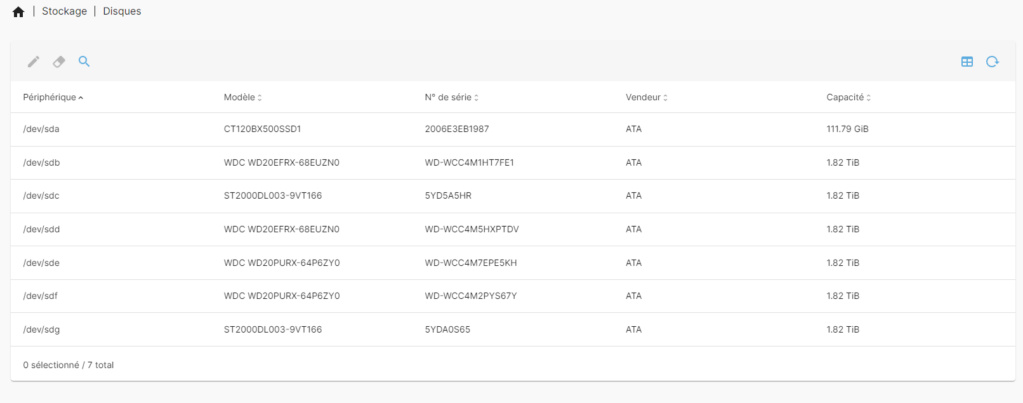

It seems that the sde disk is missing from the array. The disk itself is correctly recognized by OMV, but the RAID is not available...

As requested by the moderator:


```
root@NAS-Clauce:~# cat /proc/mdstat
Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md127 : inactive sdb[5](S) sdg[4](S) sdf[3](S) sdc[0](S) sdd[1](S)
9766912440 blocks super 1.2

unused devices: <none>
root@NAS-Clauce:~# blkid
/dev/sdb: UUID="e2c2cb4c-3016-aaf3-85eb-945434697d09" UUID_SUB="30619ac9-18c1-234f-bab7-20a703821dfd" LABEL="NAS-Clauce.local:nAsRaid6" TYPE="linux_raid_member"
/dev/sda1: UUID="c9d17701-fd97-441d-8cee-dd016bbf61d6" BLOCK_SIZE="4096" TYPE="ext4" PARTUUID="cbe3b062-01"
/dev/sda5: UUID="3d257171-4b7a-4340-93bb-984a6dff68b0" TYPE="swap" PARTUUID="cbe3b062-05"
/dev/sdc: UUID="e2c2cb4c-3016-aaf3-85eb-945434697d09" UUID_SUB="9a8b8a48-3f16-a7ea-3ba4-ce98d62c82c2" LABEL="NAS-Clauce.local:nAsRaid6" TYPE="linux_raid_member"
/dev/sdd: UUID="e2c2cb4c-3016-aaf3-85eb-945434697d09" UUID_SUB="0a8d8147-3bf0-390f-40a0-616322cab317" LABEL="NAS-Clauce.local:nAsRaid6" TYPE="linux_raid_member"
/dev/sdf: UUID="e2c2cb4c-3016-aaf3-85eb-945434697d09" UUID_SUB="5a9a2cbe-f33d-9c52-b4b2-b1e009620b7f" LABEL="NAS-Clauce.local:nAsRaid6" TYPE="linux_raid_member"
/dev/sdg: UUID="e2c2cb4c-3016-aaf3-85eb-945434697d09" UUID_SUB="f928ff46-faf9-37ea-9651-7aa2f85740bf" LABEL="NAS-Clauce.local:nAsRaid6" TYPE="linux_raid_member"
root@NAS-Clauce:~# cat /etc/mdadm/mdadm.conf
# This file is auto-generated by openmediavault (https://www.openmediavault.org)
# WARNING: Do not edit this file, your changes will get lost.

# mdadm.conf
#
# Please refer to mdadm.conf(5) for information about this file.
#

# by default, scan all partitions (/proc/partitions) for MD superblocks.
# alternatively, specify devices to scan, using wildcards if desired.
# Note, if no DEVICE line is present, then "DEVICE partitions" is assumed.
# To avoid the auto-assembly of RAID devices a pattern that CAN'T match is
# used if no RAID devices are configured.
DEVICE partitions

# auto-create devices with Debian standard permissions
CREATE owner=root group=disk mode=0660 auto=yes

# automatically tag new arrays as belonging to the local system
HOMEHOST <system>

# instruct the monitoring daemon where to send mail alerts
MAILADDR fournier.thibaut@gmail.com
MAILFROM root

# definitions of existing MD arrays
ARRAY /dev/md/NAS-Clauce.local:nAsRaid6 metadata=1.2 name=NAS-Clauce.local:nAsRaid6 UUID=e2c2cb4c:3016aaf3:85eb9454:34697d09
root@NAS-Clauce:~# mdadm --detail --scan --verbose
INACTIVE-ARRAY /dev/md127 num-devices=5 metadata=1.2 name=NAS-Clauce.local:nAsRaid6 UUID=e2c2cb4c:3016aaf3:85eb9454:34697d09
devices=/dev/sdb,/dev/sdc,/dev/sdd,/dev/sdf,/dev/sdg
```

Can someone help me?
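To make the output above easier to read, here is a small bash sketch (bash 4 or later assumed). It is only an illustration, not an OMV or mdadm tool, and the function names `list_members`, `missing_numbers` and `normalize_uuid` are hypothetical. It parses the `md127` line copied from the `/proc/mdstat` output, prints each member with the number and flag shown in brackets, reports which numbers between 0 and the highest one are not used by any member, and checks that the UUID printed by `blkid` and the one in `mdadm.conf` are the same value written in two notations. Assumption: the bracketed number is the device number stored in each member's superblock; on an array whose disks were never replaced it usually matches the slot, so a gap only hints at which member is absent. Nothing is read from the live system.

```shell
#!/usr/bin/env bash
# Hypothetical helper sketch: parses text copied from this post, reads nothing live.
# Requires bash 4+ (regex matching, associative arrays, ${var,,}).

MDSTAT_LINE='md127 : inactive sdb[5](S) sdg[4](S) sdf[3](S) sdc[0](S) sdd[1](S)'
BLKID_UUID='e2c2cb4c-3016-aaf3-85eb-945434697d09'  # notation used by blkid
CONF_UUID='e2c2cb4c:3016aaf3:85eb9454:34697d09'    # notation used by mdadm.conf

# Print "device number flag" for each member token such as sdb[5](S).
# The flag is "-" when the token has no (X) suffix.
list_members() {
  local re='^([a-z0-9]+)\[([0-9]+)]([(]([A-Z])[)])?$'
  local tok
  local -a toks
  read -ra toks <<< "$1"   # split on whitespace without glob expansion
  for tok in "${toks[@]}"; do
    if [[ $tok =~ $re ]]; then
      echo "${BASH_REMATCH[1]} ${BASH_REMATCH[2]} ${BASH_REMATCH[4]:--}"
    fi
  done
}

# Print, space-separated, the numbers from 0 up to the highest one seen
# that no member uses.
missing_numbers() {
  local -A seen=()
  local dev num flag i max=-1
  local -a out=()
  while read -r dev num flag; do
    seen[$num]=1
    if (( num > max )); then max=$num; fi
  done < <(list_members "$1")
  for (( i = 0; i <= max; i++ )); do
    if [[ -z ${seen[$i]:-} ]]; then out+=("$i"); fi
  done
  echo "${out[*]}"
}

# Reduce a UUID to its 32 lowercase hex digits so both notations compare equal.
normalize_uuid() {
  local u=${1//[-:]/}
  echo "${u,,}"
}

echo "device number flag:"
list_members "$MDSTAT_LINE"
echo "numbers not used by any member: $(missing_numbers "$MDSTAT_LINE")"
if [[ $(normalize_uuid "$BLKID_UUID") == "$(normalize_uuid "$CONF_UUID")" ]]; then
  echo "blkid and mdadm.conf UUIDs name the same array"
else
  echo "blkid and mdadm.conf UUIDs differ"
fi
```

With the values from this post, the sketch lists five members, reports number 2 as unused, and finds the two UUIDs identical. As far as I know, `/proc/mdstat` marks every member of an inactive array with `(S)` because no slot has been assigned yet, so that flag alone does not mean the disks were turned into spares.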

Maybe this problem has already been dealt with in another topic, but I can't tell whether it is exactly the same problem.

Thanks a lot in advance, I'm completely lost!
