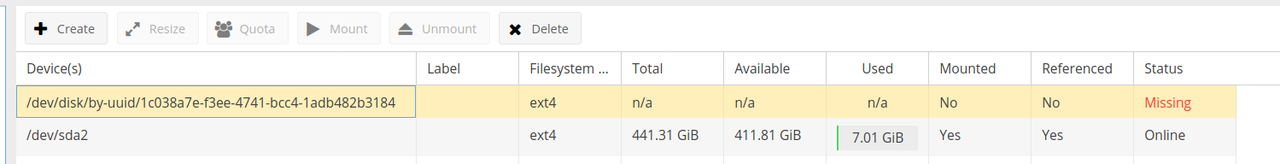

My dying HDDs:

The drives above normally operate at around 40-42 °C (not ideal from what I've read), but I took the screenshot above with the case open.

cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4] [linear] [multipath] [raid0] [raid1] [raid10]

md127 : active raid5 sdd[1] sdc[2]

15627788288 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

bitmap: 0/59 pages [0KB], 65536KB chunk

unused devices: <none>
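The `[3/2] [_UU]` part of that output is what marks the array as degraded: three members expected, two active. As a rough sketch (the function name `degraded_arrays` is made up for illustration, and it assumes the usual multi-line mdstat layout), the same check can be scripted:

```shell
#!/bin/sh
# Report md arrays whose [expected/active] counter shows missing members.
# Reads mdstat-formatted text from the file given as $1 (default: /proc/mdstat).
# NOTE: degraded_arrays is a hypothetical helper, not part of mdadm.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }            # remember the current array name
        match($0, /\[[0-9]+\/[0-9]+\]/) {      # matches counters such as [3/2]
            # strip the brackets, then split "expected/active" on the slash
            split(substr($0, RSTART + 1, RLENGTH - 2), n, "/")
            if (n[2] + 0 < n[1] + 0)
                printf "%s: %d of %d devices active\n", name, n[2], n[1]
        }
    ' "${1:-/proc/mdstat}"
}
```

Calling `degraded_arrays` with no argument reads `/proc/mdstat`; for the output posted above it should report md127 with 2 of 3 devices active.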

blkid

/dev/sda1: UUID="EAE0-223A" TYPE="vfat" PARTUUID="e9d78ebc-e99d-434f-8ac9-6bdb8b73abbe"

/dev/sda2: UUID="c5b77818-f6ec-41b4-8e82-c20a5159d75a" TYPE="ext4" PARTUUID="83069319-4734-44d9-aeda-70cead2edf13"

/dev/sda3: UUID="71435388-ef67-401d-a88f-674167bc4a7c" TYPE="swap" PARTUUID="95f4dee0-acbb-4046-8772-6969a341c972"

/dev/sdb: UUID="f1d3e5d2-a10e-7ea5-82ac-9b10c0fbebcf" UUID_SUB="81cd3ec1-5739-c9a5-3005-7b930411eebb" LABEL="tomanas1raid5" TYPE="linux_raid_member"

/dev/sdc: UUID="f1d3e5d2-a10e-7ea5-82ac-9b10c0fbebcf" UUID_SUB="5000e24a-eef1-4871-7864-ce76f25d776a" LABEL="tomanas1raid5" TYPE="linux_raid_member"

/dev/md127: UUID="83fb3f1a-d032-42b3-bf7d-727059721db6" LABEL="omv1raid5" TYPE="crypto_LUKS"

/dev/sdd: UUID="f1d3e5d2-a10e-7ea5-82ac-9b10c0fbebcf" UUID_SUB="e651a4a2-7d57-a0e8-81cf-e05becf36754" LABEL="tomanas1raid5" TYPE="linux_raid_member"

/dev/mapper/md127-crypt: LABEL="omv1raid5" UUID="ab93b8c5-d3b4-46d1-8c61-3c62e49335c8" TYPE="ext4"

/dev/sdf: UUID="9b85259f-51b1-41fb-8782-030d182ef28d" TYPE="crypto_LUKS"

/dev/sde1: UUID="39d0a4fd-243b-4dc0-9e99-c74e372d7931" TYPE="crypto_LUKS"

/dev/mapper/sde1-crypt: LABEL="wd4.0b" UUID="0bdf3f0c-fef6-4da6-bccc-805bbf055686" UUID_SUB="bb0d3684-247f-4b7c-a514-13718788aa65" TYPE="btrfs"

/dev/mapper/sdf-crypt: LABEL="wd4.0a" UUID="b44d046c-06ca-4058-b1c2-b669e5e136f6" UUID_SUB="7ed3root@omv:/sroot@omv:/sroot@omv:/root@omvroot@ororroroorootroot@rootrootroot@oroororrroorootrorrrrororrorrrrooroot@omrooroot@omv:/srv/dev-disk-by-uuid-ab93b8c5-d3b4-46d1-8c61-3c62e49335c8/services/chendesk1#


(I have no idea why the UUID_SUB for the last one is so long, or why my array is md127 instead of md0 or something numerically lower...)

fdisk -l | grep "Disk "

Disk /dev/sda: 465.8 GiB, 500107862016 bytes, 976773168 sectors

Disk model: Samsung SSD 860

Disk identifier: 0F8D6A4F-6EDD-4EE0-96E4-F4E904EBBE0B

Disk /dev/sdb: 7.3 TiB, 8001563222016 bytes, 15628053168 sectors

Disk model: TOSHIBA MN06ACA8

Disk /dev/sdc: 7.3 TiB, 8001563222016 bytes, 15628053168 sectors

Disk model: TOSHIBA MN06ACA8

Disk /dev/sdd: 7.3 TiB, 8001563222016 bytes, 15628053168 sectors

Disk model: TOSHIBA MN06ACA8

Disk /dev/md127: 14.6 TiB, 16002855206912 bytes, 31255576576 sectors

Disk /dev/mapper/md127-crypt: 14.6 TiB, 16002838429696 bytes, 31255543808 sectors

Disk /dev/sdf: 3.7 TiB, 4000787030016 bytes, 976754646 sectors

Disk model: EFRX-68WT0N0

Disk /dev/sde: 3.7 TiB, 4000787030016 bytes, 976754646 sectors

Disk model: EFRX-68WT0N0

Disk identifier: 0x993043cf

Disk /dev/mapper/sde1-crypt: 3.7 TiB, 4000783007744 bytes, 976753664 sectors

Disk /dev/mapper/sdf-crypt: 3.7 TiB, 4000784932864 bytes, 976754134 sectors


cat /etc/mdadm/mdadm.conf

# This file is auto-generated by openmediavault (https://www.openmediavault.org)

# WARNING: Do not edit this file, your changes will get lost.

# mdadm.conf

#

# Please refer to mdadm.conf(5) for information about this file.

#

# by default, scan all partitions (/proc/partitions) for MD superblocks.

# alternatively, specify devices to scan, using wildcards if desired.

# Note, if no DEVICE line is present, then "DEVICE partitions" is assumed.

# To avoid the auto-assembly of RAID devices a pattern that CAN'T match is

# used if no RAID devices are configured.

DEVICE partitions

# auto-create devices with Debian standard permissions

CREATE owner=root group=disk mode=0660 auto=yes

# automatically tag new arrays as belonging to the local system

HOMEHOST <system>

# instruct the monitoring daemon where to send mail alerts

MAILADDR ----------@----------.com

MAILFROM root

# definitions of existing MD arrays

ARRAY /dev/md/tomanas1raid5 metadata=1.2 name=tomanas1raid5 UUID=f1d3e5d2:a10e7ea5:82ac9b10:c0fbebcf


mdadm --detail --scan --verbose

ARRAY /dev/md/tomanas1raid5 level=raid5 num-devices=3 metadata=1.2 name=tomanas1raid5 UUID=f1d3e5d2:a10e7ea5:82ac9b10:c0fbebcf devices=/dev/sdc,/dev/sdd

Quote

Post type of drives and quantity being used as well.

3x Toshiba MN06ACA800/JP 8TB NAS HDD (CMR)

Quote

Post what happened for the array to stop working? Reboot? Power loss?

Array is still working, but is approaching imminent failure (I assume). I received the following email alert last week:

==========

This message was generated by the smartd daemon running on:

host name: omv

DNS domain: mylocal

The following warning/error was logged by the smartd daemon:

Device: /dev/disk/by-id/ata-TOSHIBA_MN06ACA800_11Q0A0CPF5CG [SAT], FAILED SMART self-check. BACK UP DATA NOW!

Device info:

TOSHIBA MN06ACA800, S/N:11Q0A0CPF5CG, WWN:5-000039-aa8d1b7f8, FW:0603, 8.00 TB

==========

(This was for /dev/sdd. I received an identical email for the other dying drive, /dev/sdb, at the same time.)

I already have a regular backup of the data, and the replacement hard drives arrived a little while ago. Since all three HDDs in this RAID5 were purchased new and installed last April, I'm also quite concerned about why two of them would start failing this early and at the same time. But first things first: I've been reading up on how to rebuild my setup with the replacement drives and came upon this thread/post:

What you do is fail the drive using mdadm, then remove the drive using mdadm, shutdown, install the new drive, reboot the raid should appear as clean/degraded, then add the new drive using mdadm.

At least the above is the procedure anyway.
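As a rough sketch of how the quoted procedure could map onto commands, the helper below only prints the steps and runs nothing. The name `replace_plan` and the new-drive name `/dev/sdX` are made up for illustration, and the real device names should be checked against `/proc/mdstat` first, since the output above lists only sdd and sdc as active members. `--fail`, `--remove` and `--add` are the long forms of mdadm's manage-mode options.

```shell
#!/bin/sh
# Print (do not execute) the mdadm steps for swapping one array member.
# Usage: replace_plan ARRAY OLD_DEVICE NEW_DEVICE
# NOTE: replace_plan is a hypothetical helper; device names are placeholders.
replace_plan() {
    if [ "$#" -ne 3 ]; then
        echo "usage: replace_plan ARRAY OLD_DEVICE NEW_DEVICE" >&2
        return 1
    fi
    array=$1; old=$2; new=$3
    # 1. mark the old member as faulty
    printf 'mdadm %s --fail %s\n' "$array" "$old"
    # 2. detach it from the array
    printf 'mdadm %s --remove %s\n' "$array" "$old"
    # 3. manual step between the two halves of the procedure
    printf '# shut down, swap the physical drive, boot, check that the array is degraded but active\n'
    # 4. add the replacement, which starts the rebuild
    printf 'mdadm %s --add %s\n' "$array" "$new"
    # 5. watch the resync progress
    printf 'cat /proc/mdstat\n'
}
```

For example, `replace_plan /dev/md127 /dev/sdb /dev/sdX` prints the five steps for review before anything is typed as root.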

I just want to confirm I'm not misunderstanding what it means to "fail the drive using mdadm" before I do something stupid. Is the following what I should be entering via SSH/CLI?

mdadm /dev/md127 -f /dev/sdb

Also, with 2 of the 3 drives nearing failure, I'm wondering if I should just rebuild the RAID5 from zero and copy the data over from the backup, instead of trying to replace the drives one by one?

Thank you!

(edit: fixed code line breaks)