Hello,

I'm thinking about building a new NAS for home use only, and I need some suggestions and help on what I should do.

My idea is to custom-build one.

- I want around 40 TB of usable storage (should be enough). I'm thinking of 6x 8 TB HDDs.

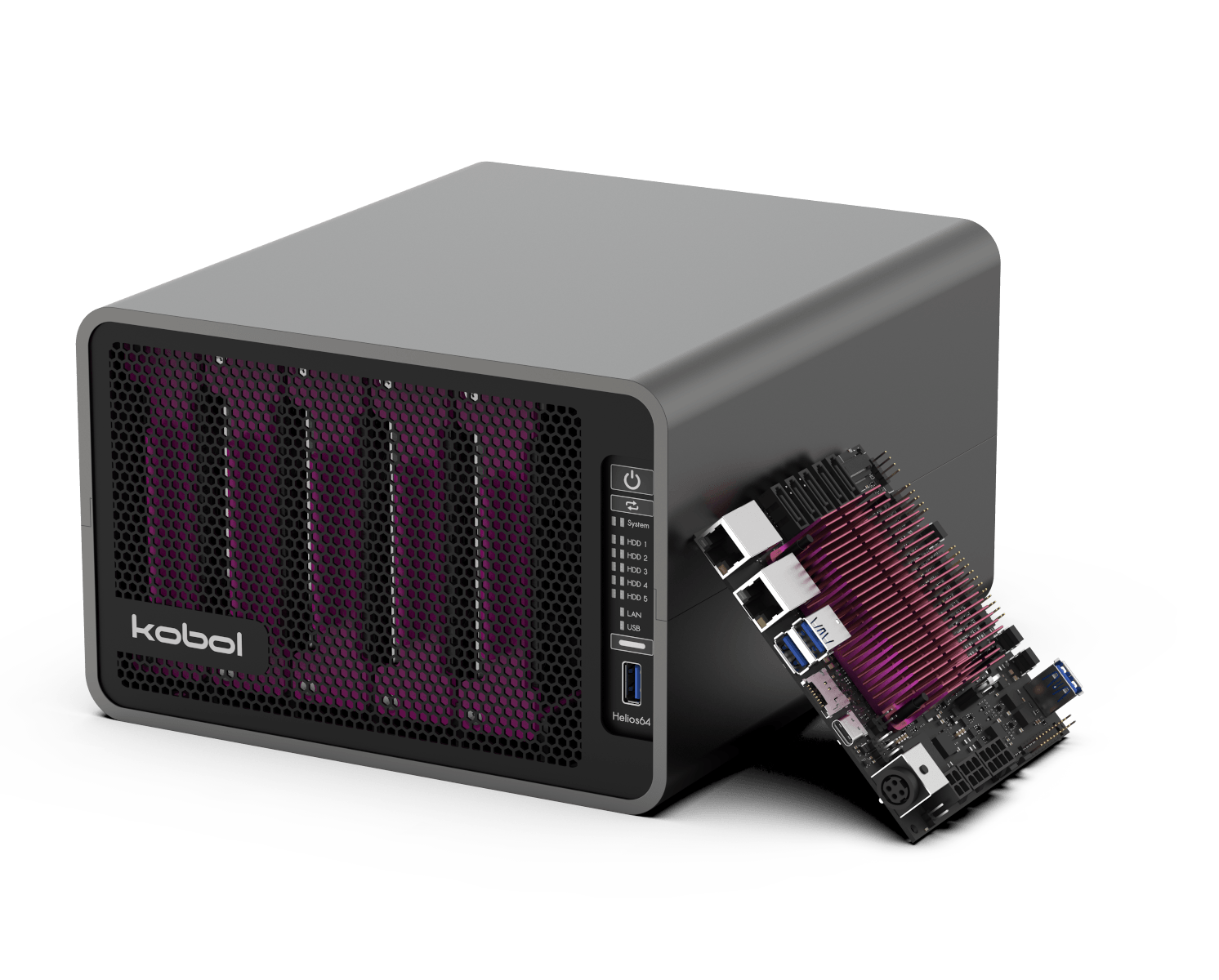

- I want to use a single-board computer (SBC) for space reasons

- I plan to use OMV 5, or OMV 6 in the future

- I want to use Portainer for Docker

- The data mostly rests on the server and is very rarely moved. Only my photography projects and movies.

- The NAS should handle my data of course, plus some Docker containers (Nextcloud, Bitwarden, WordPress, Jellyfin, ...), and that's it. So I don't need to edit videos or anything like that on the machine.

- I plan to use external HDDs as a backup

- Remember, it is a home server and not a crazy high-end machine with 800 MB/s transfer speeds. A normal speed of 30 to 100 MB/s is totally okay for my light use.
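
For reference, here is a rough sketch of the arithmetic behind the 6x 8 TB idea and the speed range above. It assumes a simple parity array (one parity drive for RAID 5, two for RAID 6), decimal terabytes as printed on drive labels, and a constant transfer rate. The function names are made up for illustration, and real-world numbers will be lower because of filesystem overhead.

```python
def usable_tb(drives: int, size_tb: float, parity_drives: int) -> float:
    """Usable capacity in TB of a parity array (RAID 5: 1 parity drive, RAID 6: 2)."""
    if drives <= parity_drives:
        raise ValueError("need more drives than parity drives")
    return (drives - parity_drives) * size_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


def transfer_days(data_tb: float, speed_mb_s: float) -> float:
    """Days needed to move data_tb at a constant speed given in MB/s."""
    seconds = data_tb * 10**12 / (speed_mb_s * 10**6)
    return seconds / 86_400


if __name__ == "__main__":
    for name, parity in (("RAID 5", 1), ("RAID 6", 2)):
        tb = usable_tb(6, 8, parity)
        print(f"{name}: {tb:.0f} TB usable, about {tb_to_tib(tb):.1f} TiB as shown by the OS")

    # How long a full copy of 40 TB would take at both ends of the speed range
    for speed in (30, 100):
        print(f"40 TB at {speed} MB/s: about {transfer_days(40, speed):.1f} days")
```

So 6x 8 TB in RAID 5 hits the 40 TB target exactly on paper, while RAID 6 with the same drives would leave 32 TB. The transfer estimate mainly matters for the first full backup to external HDDs.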

__________________________________________________________________________________________

Now to the questions.

- Which SBC should I use? Can someone recommend a good one with many SATA ports, or with a circuit board expansion card for all the hard drives?

It is quite difficult for me to find something that I think would be suitable, so what do you recommend or have experience with?

- Which RAID level would you suggest? I was thinking of RAID 5.

- What about Unraid and other NAS/RAID software? I plan to use OMV (because my Raspberry Pi 4 system also uses it and it is comfortable), but I have read a lot of people saying that Unraid and the like are the best software.

- How about the upgradability of the software? Will an upgrade damage the RAID or my system?

Does anyone have experience with this, or can anyone recommend something? Also, can I handle everything in OMV, or is there any difference between OMV and other software?

- Which hard drives should I use? No SMR drives, I have learned, but does anyone have good experience with particular drives?

__________________________________________________________________________________________

That's it for now. More questions will come up later, I guess.

It would be very kind if people who have experience and ideas would share them with me.

Thank you!