I owned a QNAP 419p+ that did its job with just 500 MB of RAM and a pair of 100 Mbps Ethernet interfaces, but it had started to show its age...

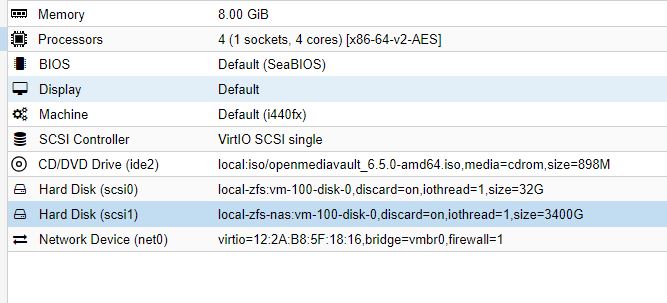

I moved to OMV (OpenMediaVault), running as a virtual machine in a Proxmox environment hosted on an HP MicroServer Gen8 with 16 GB of RAM.

By default ZFS takes half of the RAM for its ARC, so the remaining 8 GB are split evenly between OMV and another VM running a basic IP PBX (4 GB each).
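The memory split above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the ZFS ARC is allowed to grow to 50% of host RAM, as stated here (the real default depends on the OpenZFS and Proxmox versions), and it ignores the Proxmox host's own memory overhead; `memory_budget` is a hypothetical helper, not part of any tool.

```python
def memory_budget(host_ram_gb: float, arc_fraction: float, n_vms: int) -> tuple[float, float, float]:
    """Return (ARC size, RAM left for VMs, RAM per VM), all in GB.

    Assumption: the ARC grows to arc_fraction of host RAM and the rest is
    split evenly between the VMs; host OS overhead is ignored.
    """
    arc_gb = host_ram_gb * arc_fraction
    left_gb = host_ram_gb - arc_gb
    return arc_gb, left_gb, left_gb / n_vms


# 16 GB host, ARC assumed at half of RAM, two VMs (OMV and the IP PBX).
arc, left, per_vm = memory_budget(16, 0.5, 2)
print(f"ARC: {arc:g} GB, left for VMs: {left:g} GB, per VM: {per_vm:g} GB")
```

With these inputs each VM ends up with 4 GB, which matches the amount of RAM that the Proxmox monitor reports as almost fully used by OMV.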

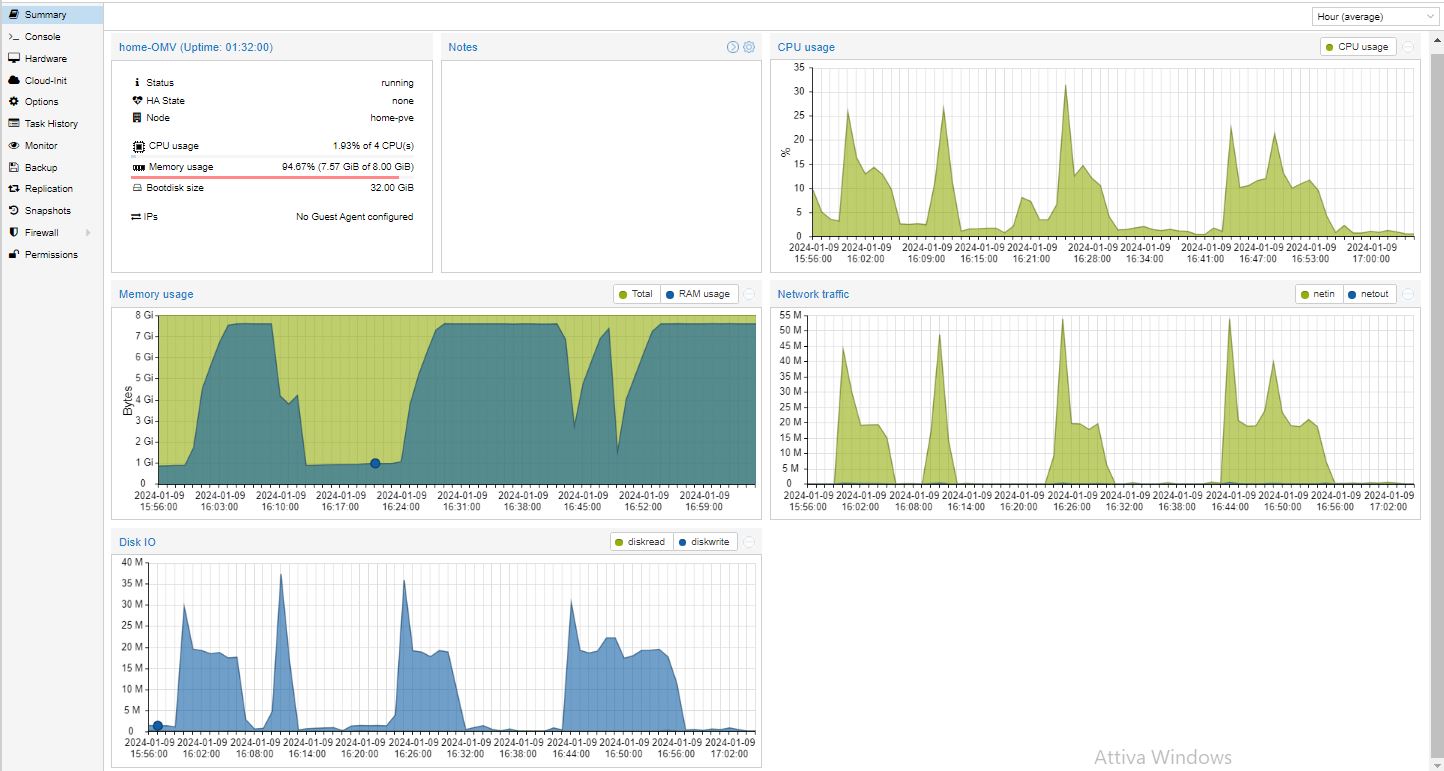

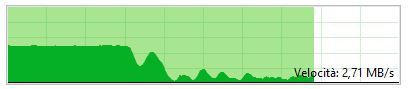

Yesterday I was transferring about 150 GB of data from my PC to OMV and got only 2 to 20 MB/s, compared with the 120 MB/s (120 × 8 = 960 Mbps) I get to a Synology NAS on the same 1 Gbps LAN. (The Proxmox/HP host is connected to the switch's SFP+ port through a 10 Gbps adapter.)
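To put the gap in numbers, the sketch below converts the quoted rates to megabits, expresses them as a share of the 120 MB/s Synology baseline, and estimates how long the 150 GB copy would take at each rate. It assumes decimal units (1 GB = 1000 MB, 1 MB/s = 8 Mbps) and a constant rate for the whole copy; the helper names are hypothetical.

```python
BASELINE_MB_S = 120  # MB/s reached when copying to the Synology NAS on the same LAN


def to_megabits(mb_per_s: float) -> float:
    """Convert megabytes per second to megabits per second."""
    return mb_per_s * 8


def fraction_of_baseline(mb_per_s: float, baseline: float = BASELINE_MB_S) -> float:
    """Share of the baseline throughput actually achieved (0.0 to 1.0)."""
    return mb_per_s / baseline


def transfer_minutes(size_gb: float, mb_per_s: float) -> float:
    """Minutes needed to copy size_gb at a constant mb_per_s (decimal units)."""
    return size_gb * 1000 / mb_per_s / 60


for rate in (2, 20, BASELINE_MB_S):
    print(f"{rate} MB/s = {to_megabits(rate):g} Mbps, "
          f"{fraction_of_baseline(rate):.1%} of baseline, "
          f"150 GB in about {transfer_minutes(150, rate):.0f} min")
```

At 2 MB/s the copy runs at under 2% of the LAN baseline, so a 150 GB transfer that should take about 21 minutes stretches to roughly 21 hours.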

`top` shows little CPU utilization, whereas the Proxmox monitor shows that almost all of the 4 GB of RAM assigned to OMV is continuously in use.

The Proxmox ZFS storage sits on a pair of 4 TB WD Red (NAS) HDDs in RAID 1 (mirror).

Is the limited amount of RAM to blame?

Or should I look for a bad configuration or a flaw in the hardware setup?

Or would it be better to install OMV directly on bare metal and drop the Proxmox environment altogether?

Thank you

(The first 30 seconds run at 118 MB/s, the normal 1 Gbps Ethernet speed.)

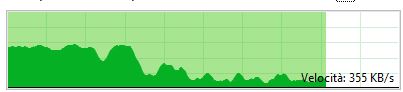

(The first 30 seconds run at 85 MB/s.)